Overview

At Datraxa, we designed a deep learning system that detects and classifies facial emotions in both static images and live video feeds.

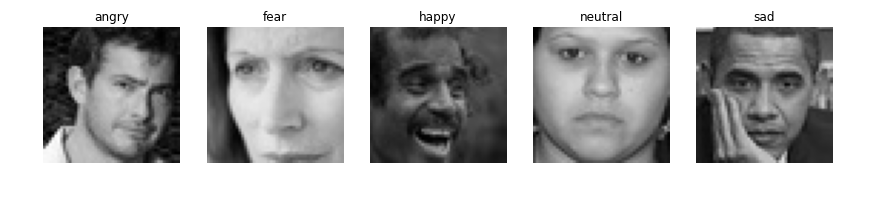

The model was trained on the FER2013 dataset and identifies emotions such as Angry, Happy, Neutral, Sad, and Fear using a Convolutional Neural Network (CNN) inspired by the VGG architecture.

To make it more adaptable for wellness and safety applications, the model was simplified to classify emotions into two main categories:

✅ Okay and ⚠️ Not Okay, a binary output suited to user experience monitoring, mental health tracking, and smart surveillance.
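The collapse from fine-grained emotions into the two categories can be sketched as a simple label mapping. This is only an illustration: the source does not say which emotions count as Okay, so the grouping in `OKAY_LABELS` is an assumption, and the names `OKAY_LABELS` and `to_binary` are hypothetical. The seven class names are the standard FER2013 labels.

```python
from typing import Dict

# The seven FER2013 class names, in the dataset's label order (0..6).
FER2013_LABELS = ["Angry", "Disgust", "Fear", "Happy", "Sad", "Surprise", "Neutral"]

# ASSUMPTION: hypothetical grouping; the project does not state which
# emotions map to "Okay" and which to "Not Okay".
OKAY_LABELS = {"Happy", "Neutral", "Surprise"}


def to_binary(probabilities: Dict[str, float]) -> str:
    """Collapse per-emotion probabilities into "Okay" or "Not Okay".

    Sums the probability mass on each side instead of mapping only the
    top-1 label, so several weak negative emotions can outweigh one
    moderately likely positive emotion. Ties resolve to "Okay".
    """
    unknown = set(probabilities) - set(FER2013_LABELS)
    if unknown:
        raise ValueError(f"unknown labels: {sorted(unknown)}")
    okay_mass = sum(p for label, p in probabilities.items() if label in OKAY_LABELS)
    not_okay_mass = sum(p for label, p in probabilities.items() if label not in OKAY_LABELS)
    return "Okay" if okay_mass >= not_okay_mass else "Not Okay"
```

Summing probabilities is one possible design choice. Mapping only the most likely label would be simpler but would ignore how confident the model is.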

🔍 Key Features

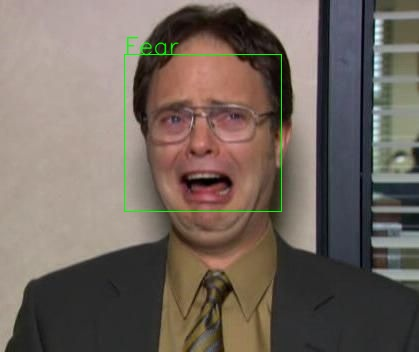

- Real-Time Emotion Detection: Captures and analyzes facial emotions directly from webcam feeds using FER_video.py.

- Image-Based Classification: Classifies emotions in static images and saves results (with face coordinates) in a structured text log.

- Advanced CNN Architecture: Uses a VGG-inspired Convolutional Neural Network with data augmentation for improved generalization.

- High Accuracy: Achieved an impressive 92.5% test accuracy on the challenging FER2013 dataset.

- Database Integration: Stores predictions, facial coordinates, timestamps, and labels in a MySQL database for later analytics.
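The text log and database storage described above can be sketched roughly as follows. This is a minimal sketch, not the project's code: the `Prediction` record, the `predictions` table, its column names, and the log-line layout are all hypothetical. Python's built-in `sqlite3` stands in for MySQL here so the example runs without a server. A MySQL driver would follow the same parameterized-insert pattern, with `%s` placeholders instead of `?`.

```python
import sqlite3
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class Prediction:
    """One detected face (hypothetical record layout)."""
    label: str       # e.g. "Okay" or "Not Okay"
    x: int           # left edge of the face box, in pixels
    y: int           # top edge of the face box, in pixels
    width: int       # face box width, in pixels
    height: int      # face box height, in pixels
    timestamp: str   # ISO 8601 string

    def to_log_line(self) -> str:
        """Format the record as one tab-separated line of a text log."""
        box = f"{self.x},{self.y},{self.width},{self.height}"
        return f"{self.timestamp}\t{self.label}\t{box}"


def create_table(conn: sqlite3.Connection) -> None:
    """Create the (assumed) predictions table if it does not exist yet."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS predictions (
               id INTEGER PRIMARY KEY AUTOINCREMENT,
               label TEXT NOT NULL,
               x INTEGER, y INTEGER, width INTEGER, height INTEGER,
               timestamp TEXT NOT NULL
           )"""
    )


def save_prediction(conn: sqlite3.Connection, p: Prediction) -> int:
    """Insert one prediction with a parameterized query; return its row id."""
    cur = conn.execute(
        "INSERT INTO predictions (label, x, y, width, height, timestamp) "
        "VALUES (?, ?, ?, ?, ?, ?)",
        (p.label, p.x, p.y, p.width, p.height, p.timestamp),
    )
    conn.commit()
    return cur.lastrowid


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # in-memory stand-in for the MySQL database
    create_table(conn)
    now = datetime.now(timezone.utc).isoformat(timespec="seconds")
    pred = Prediction("Not Okay", x=120, y=80, width=64, height=64, timestamp=now)
    print(save_prediction(conn, pred))
    print(pred.to_log_line())
```

Keeping the face box and timestamp alongside each label is what makes the later analytics possible, for example counting Not Okay detections per hour.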

⚙️ Technology Stack

- Python

- TensorFlow

- OpenCV

- MySQL

- CNN (Convolutional Neural Network)

- FER2013 Dataset

💼 Use Cases

- Mental Health Monitoring: Detect emotional states in real time to identify stress or discomfort.

- Human-Computer Interaction: Enhance UX by adapting responses to users’ emotional cues.

- Smart Surveillance: Flag unusual emotional patterns for proactive security monitoring.

- Education & Engagement Tracking: Track classroom or audience engagement through emotion analysis.

The project demonstrates how data-driven modeling can infer human emotion from facial expressions. By merging computer vision and psychological insights, Datraxa’s system supports applications in healthcare, education, and human-centered AI design. It’s a step toward creating smarter, more empathetic technology that responds to people, not just inputs.

🚀 Outcome

The system delivers accurate, real-time facial emotion recognition, bridging the gap between AI and human emotion understanding.

With its adaptability and precision, it serves as a powerful foundation for emotion-aware automation systems, safety platforms, and interactive applications.

Datraxa — Bringing emotion intelligence to modern AI systems.